So finally, after a week of articles (Parts One, Two, Three), I'm ready to talk about the big new idea behind both Intel's (Nasdaq: INTC) "Merced" design and Transmeta's "Crusoe." It is... putting more processor cores on each chip! Oooooh.

VLIW

The real trick to adding more processor cores, of course, is figuring out how to make use of more cores at the same time, and once again it involves rethinking how instructions get fed into them. For most of the past decade, a good technique for doing this has been percolating through the same kind of university and corporate labs that brought us RISC (Reduced Instruction Set Computing), under the name VLIW (Very Long Instruction Word). More recently, Intel started using the idea under the name EPIC (Explicitly Parallel Instruction Computing). EPIC sounds more like RISC or CISC (Complex Instruction Set Computing), but I'm going to stick with the name VLIW because that's what the rest of the world has been calling it all along.

In either case, the trick is to group a bunch of RISC instructions together in batches, with the first instruction in each batch getting fed to the first processor core, the second instruction to the second core, and so on. These batches are Very Long, and these batches are Explicitly Parallel, so both names make sense.

If you'll remember from yesterday, in a RISC design, the instructions had to come in the right order so that the second processor core could snatch up instructions going by that were independent of what the first core was doing. But anything the second core did was really just a bonus that saved time, and if the second core didn't execute the instruction, the first core would get to it on the next clock cycle anyway. The second core was merely a helper that constantly had to look at what the first core was doing to make sure that performing the bonus instruction wouldn't interfere with anything.

In VLIW, each processor core gets its own instruction every clock cycle, no matter what -- period. When a core has nothing to do, it gets an instruction telling it to do nothing. On a four-core VLIW chip, each VLIW instruction consists of four RISC instructions glued together, one after the other. The chip doesn't decide which instruction goes to which processor core; that's decided when the program is created.
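To make the fixed-slot idea concrete, here is a minimal Python sketch of a made-up four-slot VLIW machine. The instruction format, the register names, and the `run_bundle` helper are all invented for illustration; this is not the actual encoding used by Merced or Crusoe. The sketch assumes every slot in a bundle reads the registers as they stood before the bundle started, which is what lets the slots run side by side.

```python
# Hypothetical four-slot VLIW machine: every bundle must fill all four slots,
# and an idle unit gets an explicit "do nothing" instruction.
NOP = ("nop",)

def run_bundle(bundle, regs):
    """Execute one bundle; all slots read the register values from before the bundle."""
    if len(bundle) != 4:
        raise ValueError("a bundle must fill all four slots (use NOP for idle units)")
    before = dict(regs)  # snapshot, so slots cannot see each other's results
    for instr in bundle:
        op = instr[0]
        if op == "nop":
            continue
        dest, a, b = instr[1:]
        if op == "add":
            regs[dest] = before[a] + before[b]
        elif op == "mul":
            regs[dest] = before[a] * before[b]
        else:
            raise ValueError(f"unknown op {op!r}")
    return regs

regs = {"r1": 2, "r2": 3, "r3": 0, "r4": 0}
program = [
    # Two independent operations share one bundle; two units sit idle.
    [("add", "r3", "r1", "r2"), ("mul", "r4", "r1", "r2"), NOP, NOP],
    # This add needs both results above, so it waits for the next bundle.
    [("add", "r1", "r3", "r4"), NOP, NOP, NOP],
]
for bundle in program:
    run_bundle(bundle, regs)
```

Notice that the hardware side of this is dumb on purpose: it never checks whether two slots conflict. Getting that right is entirely the job of whatever built the bundles.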

So the programming tools that create the program have to be fairly clever. "Compilers" are programs that take human-readable instruction lists (a bit like the recipes from yesterday) and translate them into a bunch of numerical instructions the hardware can perform. RISC took a while to take off for Sun (Nasdaq: SUNW) and DEC, because clever compilers capable of optimizing the instruction order weren't available until long after the actual chips shipped. Intel got around this by doing the translation and reordering in hardware, which was a great trick, but that was a heck of a job to do for just two processor cores. Intel isn't even TRYING to do IA32 (Intel Architecture 32-bit) translation in hardware for more cores. Instead, it designed them to use VLIW software.
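Here is a rough Python sketch of the kind of work such a compiler does when it targets VLIW. It is a deliberately simple in-order packer, not a description of any real compiler: the `(op, dest, sources)` instruction format and the `pack_bundles` name are assumptions made up for this example. It starts a new bundle whenever the current one is full or the next instruction reads or writes a register that something earlier in the same bundle writes.

```python
NOP = ("nop", None, ())

def pack_bundles(instrs, width=4):
    """Pack (op, dest, sources) instructions, in order, into fixed-width bundles."""
    bundles, current, written = [], [], set()
    for op, dest, srcs in instrs:
        # Conflict if this instruction reads or rewrites a register that an
        # earlier instruction in the same bundle produces.
        conflict = dest in written or any(s in written for s in srcs)
        if current and (len(current) == width or conflict):
            bundles.append(current + [NOP] * (width - len(current)))
            current, written = [], set()
        current.append((op, dest, srcs))
        written.add(dest)
    if current:
        bundles.append(current + [NOP] * (width - len(current)))
    return bundles

program = [
    ("add", "r3", ("r1", "r2")),
    ("mul", "r4", ("r1", "r2")),
    ("add", "r5", ("r3", "r4")),  # needs r3 and r4, so it starts a new bundle
    ("sub", "r6", ("r1", "r2")),
]
bundles = pack_bundles(program)
```

A real compiler would also reorder instructions to fill the empty slots, which is where the cleverness comes in. Note too that the bundle width is baked into the output: calling `pack_bundles(program, width=6)` produces different bundles from the same source.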

Merced

Intel's upcoming chip design used to be called "Merced," but now seems to have been renamed "Itanium." If nothing else has changed, each processor will have the standard cache fed by a prefetch unit we've come to expect on modern chips, and from this pool five different processor cores will be drinking.

One processor core will be the good old "IA32 translation and two RISC pipelines on a sesame seed bun" combo from the Pentium. This is for running all the IA32 software everybody has now. And if it outperforms the Pentium by much, it'll probably be because it has a larger cache and maybe a bigger memory bus feeding into the prefetch unit. Other than that, it's just a normal Pentium.

Another core is for executing instructions for PA-RISC, a processor architecture from Hewlett-Packard (NYSE: HWP), which codesigned Merced with Intel. For 99% of the time, this circuitry will sit there idle with nothing to do because most of the world isn't running PA-RISC programs.

The other three cores run a new 64-bit instruction set Intel invented, which strangely enough is called "IA64." Why move to 64-bit instructions? Because the largest number 32 bits can store is a little over four billion, and most hard drives have more than four billion bytes these days. (One billion bytes is a gigabyte -- hands up everybody with drives larger than four gigs.) Many high-end servers even have more than four billion bytes of RAM (Random Access Memory). Not being able to count above four billion is getting a bit limiting. Lots of other chip designs, such as the DEC Alpha now owned by Compaq (NYSE: CPQ) and Sun's UltraSparc, have been 64-bit for years. Intel has simply decided to follow the crowd.
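The "little over four billion" figure is easy to check. This short Python snippet is just the arithmetic, with variable names of my own choosing:

```python
# How many distinct values fit in 32 and 64 bits?
limit_32 = 2**32
limit_64 = 2**64

print(f"32-bit limit: {limit_32:,}")
print(f"64-bit limit: {limit_64:,}")

# Counting in units of 2**30 bytes, the 32-bit limit is exactly four.
gigs_addressable = limit_32 // 2**30

# A 32-bit counter sitting at its maximum wraps to zero when one more is added.
wrapped = (limit_32 - 1 + 1) % limit_32
```

Any byte offset past that limit, whether in RAM or in a file, simply can't be named by a single 32-bit number.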

So a Merced processor can run normal IA32 instructions, or it can run IA64 instructions in batches of three per clock cycle, or it can run PA-RISC instructions. But of those three different modes the thing can be in, it can only do one at a time. No matter how things work out, half the circuitry on the chip will sit idle at any given time, providing compatibility with a type of instructions the chip isn't running at the moment. Needless to say, this isn't exactly an elegant solution.

The other problem is that programs need to be recompiled for IA64 to use the full capabilities of the chip. This isn't something the end user can do for themselves unless they're using Open Source software; most people will have to get a new version from their software vendor. And not only will the software have to use a new compiler that can produce IA64 instructions, but any assumptions in the software about how large numbers can get will have to be located and fixed. Microsoft (Nasdaq: MSFT) even has to come out with a new version of Windows, and since it's abandoned support for all processors except IA32 ones (even the DEC Alpha port has been abandoned), it might take them a while to get out a "64-bit clean" version.

Intel is hedging its bets on Merced with a big push behind an IA64 port of Open Source software like Linux. Linux already worked on 64-bit chips like Alpha and UltraSparc, so it had no trouble with the 64-bit nature of Merced, and Intel demonstrated a version of Linux running on Itanium hardware at LinuxWorld Expo earlier this month. Intel will ship Itanium in the second half of this year. Microsoft has promised a compatible version of Windows to be available at the same time, but it will probably mostly be the same IA32 Windows it's shipping right now. If that happens, Itanium won't run Windows much faster than a Pentium. But even if that happens, Intel can still sell Merced to the Linux crowd without writing any more software than what's out there today. (See from ZDNet: Linux: Itanium's great 64-bit hope?)

To sum up Merced, I think Intel's transition from IA32 to IA64 instructions is less than graceful, but I'm looking forward to 64-bit chips that can handle more than 4 gigabytes of RAM and files longer than 4 gigabytes without having to jump through hoops. My other real gripe with the chip is that Intel's decision to handle the backward compatibility issue by sticking in processor cores that don't get used when running IA64 software isn't exactly elegant. Eventually, when we do get IA64 software, that's just wasted circuitry.

Transmeta's Crusoe

For an outright clever solution to feeding VLIW instructions to multiple processor cores without sacrificing IA32 compatibility, let's look at Transmeta's approach. They basically took Intel's translation layer idea from the Pentium and decided to do it in software instead of in hardware.

The Crusoe translates IA32 instructions to VLIW in software and reorganizes the resulting code to keep all the processor cores busy. This is done by a VLIW program Transmeta calls the "Code Morphing" layer (very similar to Sun's Java JIT compiler). Instead of translating each instruction every time it sees it, the Crusoe keeps large chunks of translated instructions around in memory (actually in the CPU cache, which the Crusoe VLIW instruction set can address manually). This way, the Crusoe can spend more time executing translated instructions rather than retranslating them, and if it finds itself executing the same piece of code over and over, it can go back and put extra effort into optimizing it and reorganizing the instructions and such.
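The translate-once, reuse, and re-optimize-when-hot pattern can be sketched in a few lines of Python. To be clear, this is not Transmeta's code: the `TranslationCache` class, the threshold of three runs, and the toy `translate` and `optimize` functions are all stand-ins invented to show the shape of the idea.

```python
from collections import Counter

class TranslationCache:
    """Keep translated blocks around and spend extra effort only on hot ones."""

    def __init__(self, translate, optimize, hot_threshold=3):
        self.translate = translate          # slow: source block -> native code
        self.optimize = optimize            # slower: native code -> better code
        self.hot_threshold = hot_threshold  # runs before a block counts as hot
        self.cache = {}
        self.runs = Counter()
        self.optimized = set()

    def fetch(self, address, source_block):
        if address not in self.cache:       # translate only the first time
            self.cache[address] = self.translate(source_block)
        self.runs[address] += 1
        if self.runs[address] >= self.hot_threshold and address not in self.optimized:
            self.cache[address] = self.optimize(self.cache[address])
            self.optimized.add(address)
        return self.cache[address]

# Toy stand-ins that just count how often the expensive work happens.
calls = {"translate": 0, "optimize": 0}

def toy_translate(block):
    calls["translate"] += 1
    return [f"native({instr})" for instr in block]

def toy_optimize(code):
    calls["optimize"] += 1
    return list(reversed(code))  # placeholder for real reordering

layer = TranslationCache(toy_translate, toy_optimize)
for _ in range(5):
    code = layer.fetch(0x1000, ["mov", "add"])
```

After five trips through the same block, the expensive translation has happened once and the extra optimization pass has happened once; every other visit is a plain lookup.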

The other really cool thing about Transmeta's chips is their lack of heat. They were designed from the ground up for use in portable devices, and thus use much less power than anything else. (In part, this is because they need a lot less circuitry than a Pentium does because they moved so many tasks from hardware to software.) A Crusoe chip only uses one watt of power to run at full speed, and can scale back both its clock speed and its voltage to slow down when it doesn't need that much. What determines how much speed is needed, and controls the voltage and the clock? The code morphing layer, of course. So Crusoe goes in your wristwatch without draining the battery, rather than in a desktop with a big power supply and heat sinks everywhere.
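Why scale the voltage as well as the clock? A common textbook approximation (not a figure from Transmeta) says the switching power of a chip grows roughly with the square of its voltage times its clock frequency. The Python below works one example under that assumption; the 0.8 and 0.5 scale factors are made-up numbers, and `relative_power` is a name invented here.

```python
def relative_power(voltage_scale, clock_scale):
    """Power relative to full speed, assuming power ~ voltage**2 * frequency."""
    return voltage_scale**2 * clock_scale

# Halving the clock alone halves the power under this approximation.
clock_only = relative_power(1.0, 0.5)

# Halving the clock and also dropping the voltage by 20% saves considerably more.
clock_and_voltage = relative_power(0.8, 0.5)
```

Under those assumptions the second case lands at about a third of full power, which is why a chip that can lower both knobs together has such an advantage on battery life.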

But one big reason I think the Code Morphing approach is superior to what Intel is doing is that the underlying hardware can change. Intel is going through a big shock to introduce the IA64 instruction set, which is important because 32 bits isn't enough anymore and programs have to be rewritten. But if it decides to add more processor cores to each VLIW bundle in the future, programs will have to be recompiled AGAIN. Isolating those kinds of details behind a layer of housekeeping software is just a whole lot tidier.

This is why Transmeta has been getting so much news lately. (OK, that and a bunch of brilliant people like the inventor of Linux and the co-author of the game Quake work there.)

By the way, several people have e-mailed me to clarify the lineage of RISC, mentioning people like John Cocke at IBM, Dave Patterson at Berkeley, and John Hennessy at Stanford. The RISC designs we take for granted today include many ideas, from simplifying the circuitry in order to make manufacturing easier, to complex optimizations. In case you haven't noticed, techies may not be all that interested in ownership of ideas, but we're sticklers for correct attribution.

Finally, this weekend's Fool Radio Show is returning to the debate over AOL vs. Yahoo! Which of the two Internet media giants will be the better investment over the next three years? We've set up a poll to tabulate what Fools the world over think on this matter. Vote here, then tune into this week's show to hear Tom's and David's opinions.

Have a great weekend!

-Oak

Inside Intel Again: Merced vs. Crusoe


Rob Landley compares Intel's Merced chip design to Transmeta's Crusoe chip design and, uh oh, finds Transmeta's design to be superior.

Invest Smarter with The Motley Fool

Join Over Half a Million Premium Members Receiving…

- New Stock Picks Each Month

- Detailed Analysis of Companies

- Model Portfolios

- Live Streaming During Market Hours

- And Much More

Motley Fool Investing Philosophy

- #1 Buy 25+ Companies

- #2 Hold Stocks for 5+ Years

- #3 Add New Savings Regularly

- #4 Hold Through Market Volatility

- #5 Let Winners Run

- #6 Target Long-Term Returns

Why do we invest this way? Learn More

Related Articles

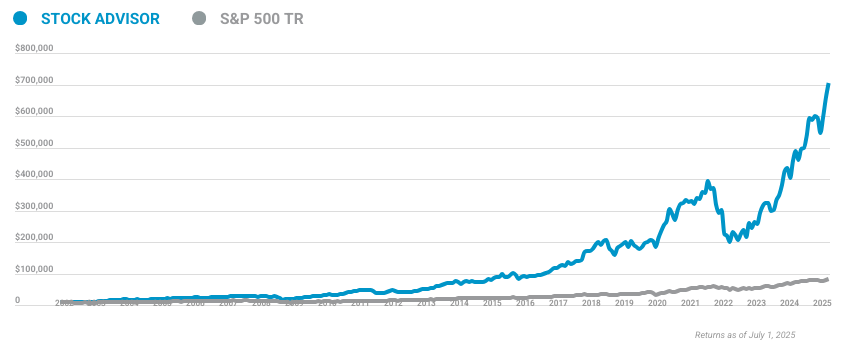

Motley Fool Returns

Market-beating stocks from our award-winning analyst team.

Calculated by average return of all stock recommendations since inception of the Stock Advisor service in February of 2002. Returns as of 04/24/2024.

Discounted offers are only available to new members. Stock Advisor list price is $199 per year.

Calculated by Time-Weighted Return since 2002. Volatility profiles based on trailing-three-year calculations of the standard deviation of service investment returns.

Premium Investing Services

Invest better with The Motley Fool. Get stock recommendations, portfolio guidance, and more from The Motley Fool's premium services.