Imagine you're trying to impress your friends with a newly learned card trick, but instead of mastering a reusable trick, you memorize the sequence of an entire deck of cards. Bravo! You're the star of the show -- until someone shuffles the deck, and you don't have a trick anymore.

This, in a very simplified nutshell, is the idea behind overfitting in machine learning.

What is Overfitting?

Think of overfitting as the overzealous student in a classroom who, in a bid to outshine peers, learns every peculiarity of the teacher's test patterns but fails to grasp the general principles of the subject. Similarly, overfitting in machine learning is when an algorithm tries too hard to match its training data. It performs impressively on that data, fitting it like a glove. But when faced with new data (the true test!), it stumbles.

Why? Well, because it also learned the noise, outliers, and random fluctuations of the training data. All of this can help the system reconstruct its training data set to perfection, but it can be a problem when applied to new situations and fresh data.
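To see the effect in miniature, here is a rough Python sketch. It is an illustration under assumptions, not a recipe from any real system: it assumes NumPy is installed, the data is made up (a sine curve plus random noise), and the helper names `make_data` and `mse` are invented for this example. It fits polynomials of increasing flexibility to 15 noisy points and compares the error on those points with the error on 200 fresh ones.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Made-up data: a smooth sine curve plus random noise."""
    x = rng.uniform(-1.0, 1.0, n)
    y = np.sin(3.0 * x) + rng.normal(0.0, 0.3, n)
    return x, y

def mse(coeffs, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

# A small training set and a larger set of "new" data from the same source.
x_train, y_train = make_data(15)
x_test, y_test = make_data(200)

results = {}
for degree in (1, 3, 10):
    # Higher degree means a more flexible model with more room to memorize noise.
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(f"degree {degree:2d}: train MSE {results[degree][0]:.3f}, "
          f"test MSE {results[degree][1]:.3f}")
```

The training error can only shrink as the polynomial gets more flexible. The error on fresh data is the number that matters, and for the most flexible fit it will typically sit well above the training error.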

And this potential problem makes its presence known in all facets of machine learning. Developers must account for the overfitting risk, whether they are building systems for supervised learning, unsupervised learning, semi-supervised learning, or designing neural networks for deep learning. All of these approaches can deliver poor results due to a weak or unbalanced bucket of training data.

Why should you care about overfitting?

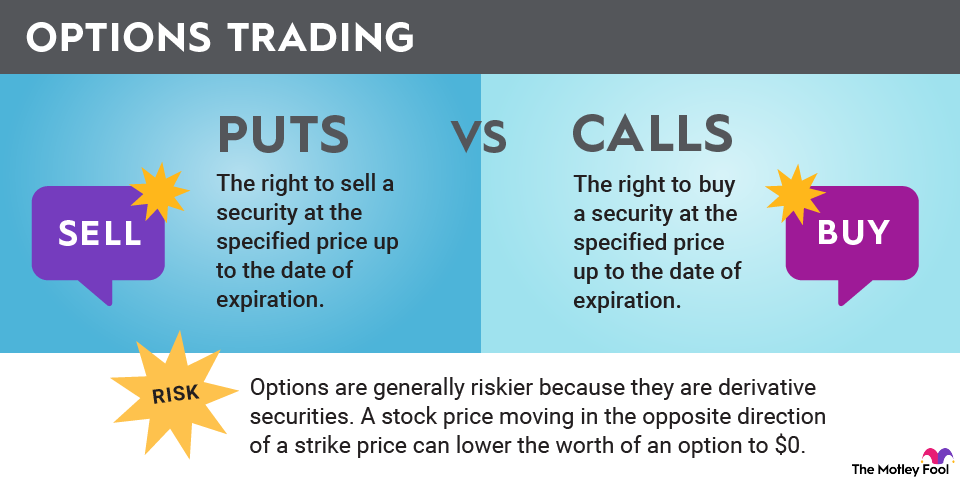

Let's think about overfitting in the context of investing. You wouldn't put all your money on a single stock just because it's had a winning streak recently, right? Of course not. That's because you understand the principle of diversification, and you know that a strong set of earlier results doesn't necessarily lead to similar gains in the future.

Overfitting is the algorithmic equivalent of betting it all on one stock, based on financial data and stock charts from the past, with no regard for recessions, innovations, new or departing competitors, and other changes in the market environment.

In the machine learning case, the algorithm bets it all on the specific training data. The system ends up building a prediction model that fits that data but does poorly when facing information from outside that specific data set.

This is important because an overfit model is a deceptive model. It may look like it's doing wonders as long as your tests stick to the training data. But in reality, its predictive power is about as reliable as a magic eight-ball. Overfitting undermines a machine-learning model's ability to generalize the lessons learned so they can apply to new input from previously unseen data sets. In the world of machine learning, generalization is the name of the game.

How to skirt the overfitting quagmire

Now you know why an overfit machine learning system is about as helpful as a solar-powered flashlight without batteries -- it only works when you need it the least. So what can you do about it? As it turns out, the inventors of modern machine learning systems have come up with some nifty techniques to avoid this problem.

- Cross-validation: This technique involves dividing the data set into a number of subsets. The model is trained on some of these subsets and then validated on the remaining ones. This process is repeated several times with different combinations, providing a robust estimate of the model's performance on unseen data.

- Early stopping: This involves halting the training process before the model starts to overfit. Essentially, you monitor the model's performance on a validation set during training and stop training when that performance begins to degrade. The model that has trained the longest isn't always the best one.

- Dropout: This is a technique used specifically in neural networks. It involves randomly "dropping out" or turning off certain neurons during training. The system must route the data flow through a different digital neuron path, which helps prevent an undesired focus on seemingly helpful but potentially misleading data relationships.

- Data augmentation: This involves creating new synthetic training examples by applying transformations to the existing data. For instance, you might flip or rotate images in a computer vision task. This increases the size and diversity of the training data and helps the model generalize better. If a landscape-tagging algorithm can tell a mountain range apart from an upside-down ocean wave, you might be on the right track.
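The first technique in the list, cross-validation, can be sketched in a few lines of Python. This is a minimal illustration under assumptions rather than a definitive implementation: it assumes NumPy, uses made-up noisy data, scores each fold with mean squared error, and uses a simple polynomial fit as a stand-in model. The helper names `k_fold_indices` and `cross_validate` are hypothetical.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the sample indices and split them into k roughly equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), k)

def cross_validate(x, y, degree, k=5):
    """Train on k-1 folds, validate on the remaining fold, and repeat k times."""
    folds = k_fold_indices(len(x), k)
    scores = []
    for i, val_idx in enumerate(folds):
        # Every fold except the i-th one is used for training.
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        coeffs = np.polyfit(x[train_idx], y[train_idx], degree)
        errors = np.polyval(coeffs, x[val_idx]) - y[val_idx]
        scores.append(float(np.mean(errors ** 2)))
    return float(np.mean(scores)), scores

# Made-up data for illustration: a sine curve plus random noise.
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, 40)
y = np.sin(3.0 * x) + rng.normal(0.0, 0.3, 40)

cv_results = {}
for degree in (1, 3, 10):
    cv_results[degree] = cross_validate(x, y, degree)
    print(f"degree {degree:2d}: mean validation MSE {cv_results[degree][0]:.3f}")
```

Because every data point serves as validation data exactly once, the averaged score gives a steadier estimate of how the model might handle unseen data than a single train/test split would.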

Remember, each of these techniques has its place and might be more or less effective depending on the specific nature of the data set and the problem at hand. A successful machine-learning system will probably use several anti-overfitting techniques because there is no silver bullet that stops every potential problem.

Like most things in machine learning (or investing, or life), it's an art as well as a science.
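For the curious, the other three techniques from the list above (early stopping, dropout, and data augmentation) can each be sketched in a few lines of Python. Treat this as a toy illustration under assumptions: it relies on NumPy, the validation losses and the tiny "images" are made up, the dropout shown is the common "inverted" variant that rescales the surviving values, and all function names are hypothetical.

```python
import numpy as np

def early_stopping_epoch(val_losses, patience=3):
    """Return the best epoch seen before validation loss fails to improve
    for `patience` epochs in a row."""
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # stop training here
    return best_epoch, best_loss

def dropout(activations, drop_prob, rng):
    """Inverted dropout: zero out random units, rescale the survivors."""
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

def augment_with_flips(images):
    """Double a batch shaped (n, height, width) by adding mirrored copies."""
    flipped = images[:, :, ::-1]
    return np.concatenate([images, flipped], axis=0)

# Made-up validation losses: improving at first, then degrading.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.45]
best_epoch, best_loss = early_stopping_epoch(val_losses)

rng = np.random.default_rng(0)
dropped = dropout(np.ones((4, 5)), drop_prob=0.5, rng=rng)

images = np.arange(12, dtype=float).reshape(2, 2, 3)  # two tiny 2x3 "images"
augmented = augment_with_flips(images)
```

Note that the stopping rule never sees the last loss in the list. That is the trade-off of a patience setting: training halts after three epochs without improvement, even if a later epoch might have done better.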

An overfitting tale from the trenches

In 2006, before Netflix (NFLX) unveiled its digital video-streaming catalog as a free add-on feature to its red DVD mailer service, the company launched an ambitious data-mining competition. The Netflix Prize taught the company many lessons over the next three years -- but not exactly the ones it wanted to learn in the first place.

The reason for this unexpected outcome was, of course, overfitting.

Netflix wasn't blind to the overfitting risk. Competitors had access to 100 million ratings of 17,000 movies, provided by 480,000 Netflix subscribers. Another 3 million ratings were held in a separate list that the programmers never saw directly. This was the held-out validation data set, where the computing models were tested and scored against clean data they had not been trained on. The task at hand was to come up with a movie recommendation algorithm that could outperform Netflix's existing system by at least 10%.

The winning team, BellKor's Pragmatic Chaos, was a coalition of three elite teams, combining more than 50 radically different analytic approaches. In second place, more than 30 teams combined under the self-descriptive name, "The Ensemble," with 48 sophisticated machine learning models under its belt.

Attempts involving just a few analytic approaches never stood a chance against these diverse giants. The two top teams tied in the final scoring round with a 10.06% improvement over Netflix's own movie recommendations model. BellKor won by submitting its final entry 22 minutes before The Ensemble.

However, Netflix never adopted BellKor's recommendation system. As you might expect at this point, both BellKor and The Ensemble performed slightly worse in the validation round, proving that even the best machine learning systems couldn't deliver truly data-agnostic prediction models.

Instead, the company was happy to spend the $1 million prize money in return for many technical ideas, along with a demonstration of diversified analytic approaches crushing single-minded methods.

"You look at the cumulative hours, and you're getting PhDs for a dollar an hour," then-CEO Reed Hastings told The New York Times. But Netflix didn't exactly get a drop-in upgrade for its movie recommendation system, as it might have hoped.