Artificial intelligence is on the rise, and accelerated computing hardware is being deployed in all corners of the world. In an environment that is leaning toward economic nationalism, the hardware that supplies compute power and speeds up AI development will be a key geopolitical issue. But what does accelerated computing really mean? Is it just a computer that goes faster than normal? Let’s break it down below.

Understanding accelerated computing

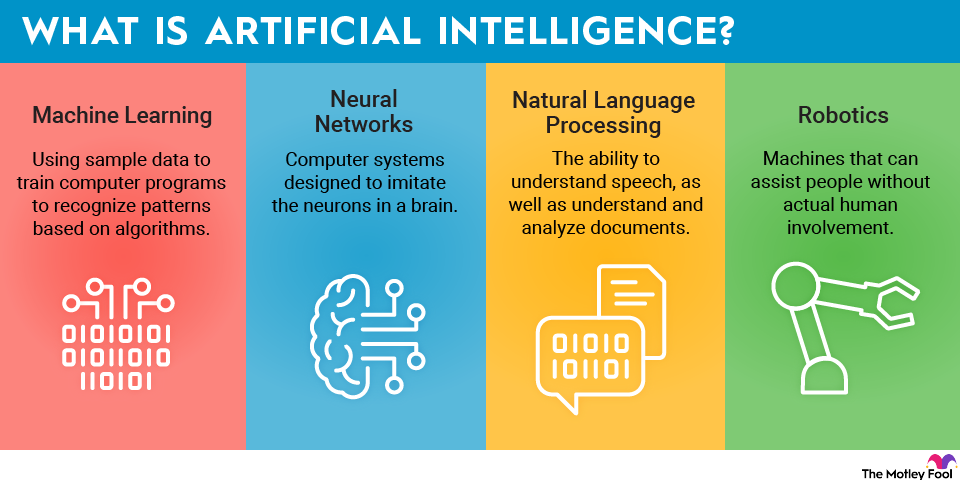

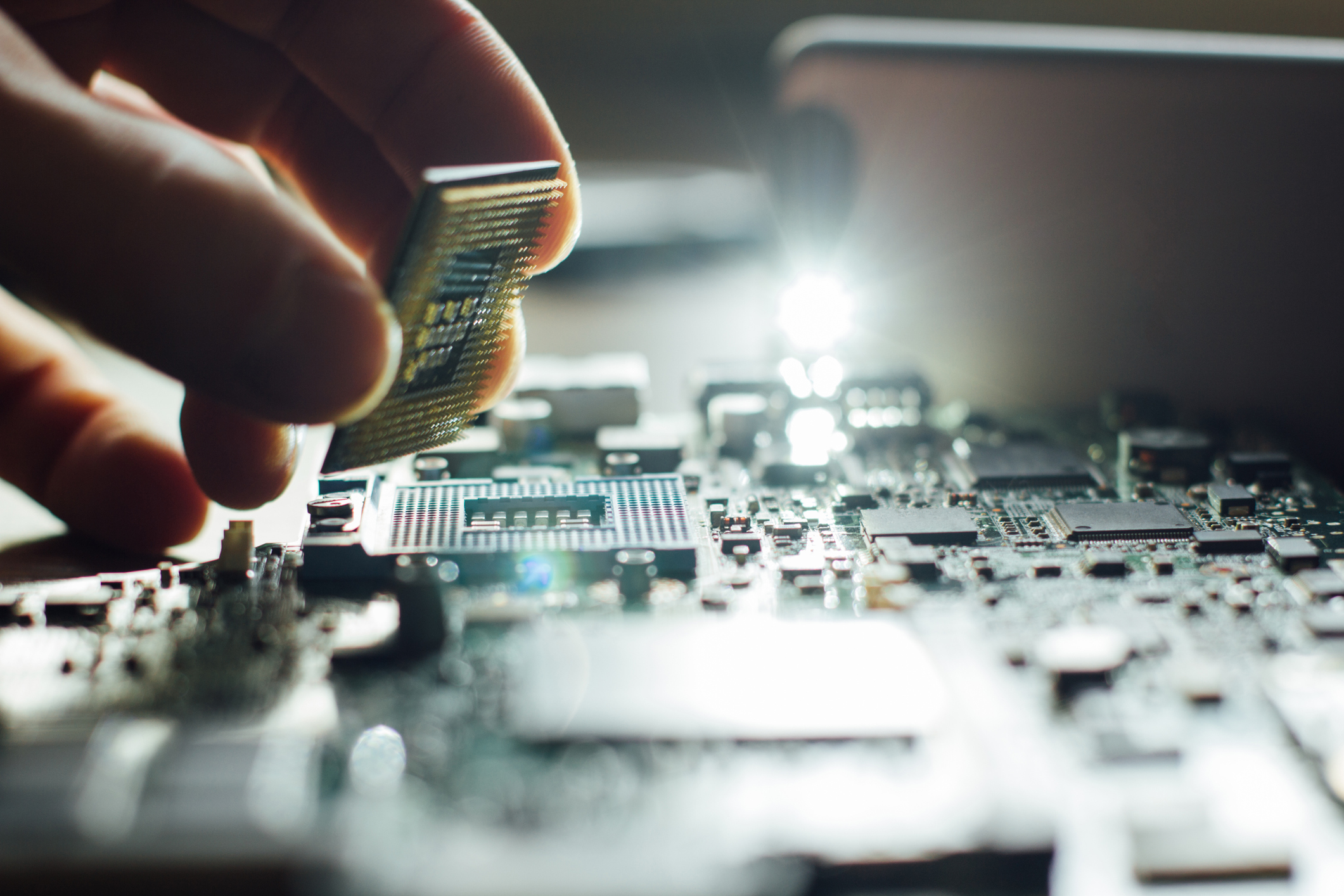

Accelerated computing refers to the use of specialized hardware to perform certain tasks faster than a traditional central processing unit (CPU) could on its own. It revolves around adding hardware accelerators like graphics processing units (GPUs), tensor processing units (TPUs), or field-programmable gate arrays (FPGAs) to work in tandem with general-purpose CPUs.

Instead of relying solely on the CPU to do all the heavy lifting, specific tasks are offloaded to these accelerators. For example, GPUs are particularly good at handling parallel operations, which makes them ideal for graphics rendering, machine learning, and scientific simulations. TPUs, which were originally developed by Google, are optimized for neural network workloads, while FPGAs can be customized for more specific computing tasks like financial modeling or video encoding.
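To make "parallel operations" concrete, here is a minimal CPU-only sketch in Python. It computes `a * x + y` for every element of two lists, once in a single pass and once split into chunks handled by separate workers. The function names and the choice of four workers are illustrative assumptions, and Python threads will not actually run this faster. The point is only the structure: no output element depends on any other, which is the property that lets a GPU spread the same work across thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_chunk(a, xs, ys):
    """Compute a*x + y for one slice; no element depends on another."""
    return [a * x + y for x, y in zip(xs, ys)]


def saxpy_serial(a, xs, ys):
    """Process the whole input in one pass, as a single CPU core would."""
    return saxpy_chunk(a, xs, ys)


def saxpy_parallel(a, xs, ys, workers=4):
    """Split the input into contiguous chunks and hand each to a worker."""
    n = len(xs)
    size = max(1, -(-n // workers))  # ceiling division, at least 1
    starts = range(0, n, size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(
            lambda s: saxpy_chunk(a, xs[s:s + size], ys[s:s + size]),
            starts,
        )
        # Chunks come back in order, so joining them rebuilds the full result.
        return [value for part in parts for value in part]


if __name__ == "__main__":
    xs = list(range(10))
    ys = [1] * 10
    print(saxpy_parallel(2, xs, ys) == saxpy_serial(2, xs, ys))
```

Because the chunked version returns the same answer as the single-pass version, the work can be divided freely, and that is what makes it a good candidate for offloading to an accelerator.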

Why accelerated computing matters

Traditional CPUs, like the one in your home or office computer, are powerful, but they are also very general. Meanwhile, workloads have become more specialized, such as training large language models (LLMs) or simulating protein folding for drug discovery. AI's energy demands are large and growing quickly, so the need for tailored, efficient processing power has grown rapidly.

Many of the world’s most important breakthroughs in AI, healthcare, climate modeling, and autonomous vehicles rely on accelerated computing. It’s what allows self-driving cars to interpret their surroundings in milliseconds and helps researchers run simulations that would take weeks on conventional systems. The impact is especially noticeable in terms of energy efficiency. Accelerated systems can complete a task using less energy because they’re optimized for the job. For businesses running massive data centers, this can be a huge boon, improving energy efficiency and driving down operating costs.
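One way to see how a chip can save energy even if it draws more power while running: energy used is power multiplied by time. The short Python sketch below works through that arithmetic. The wattages and run times are hypothetical figures chosen only for illustration, not measurements of any real system.

```python
def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy used = power draw x running time, converted from Wh to kWh."""
    return power_watts * hours / 1000


# Hypothetical figures, chosen only to illustrate the arithmetic.
cpu_kwh = energy_kwh(power_watts=200, hours=10)   # slower, lower power draw
accel_kwh = energy_kwh(power_watts=400, hours=1)  # faster, higher power draw

print(f"CPU-only: {cpu_kwh} kWh, accelerated: {accel_kwh} kWh")
```

In this made-up case the accelerator draws twice the power but finishes in a tenth of the time, so the job uses a fifth of the energy.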

How to take advantage of accelerated computing

Use cloud services to rent acceleration

You don’t need a supercomputer tucked away in your garage to access accelerated computing. Services like Amazon's (AMZN) AWS, Alphabet's (GOOG) (GOOGL) Google Cloud, and Microsoft's (MSFT) Azure let companies rent time on GPU- or TPU-backed servers. Renting can allow a small biotech startup, for example, to run protein simulations in days instead of weeks, without needing to spend millions on custom hardware.

Use existing libraries to help set it up

If you have access to the right hardware or cloud services, as mentioned above, the next step is using software that knows how to talk to it. Nvidia's CUDA platform and frameworks like TensorFlow and PyTorch are designed to run work on GPUs and other accelerators. If you are training an AI model or running complex data analysis, using one of these tools could cut hours off your processing time, without needing to rewrite everything from scratch.
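As a rough illustration of what "talking to" an accelerator looks like, the Python sketch below uses PyTorch to check whether an Nvidia GPU is visible and, if so, runs a matrix multiplication on it. The helper names `pick_device` and `matmul_shape` are made up for this example, and the code assumes PyTorch is installed; if it isn't, the sketch simply reports "cpu" and skips the math.

```python
import importlib.util


def pick_device() -> str:
    """Return "cuda" when PyTorch can see an Nvidia GPU, otherwise "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed on this machine
    import torch
    return "cuda" if torch.cuda.is_available() else "cpu"


def matmul_shape(device: str, n: int = 512) -> tuple:
    """Multiply two random n x n matrices on `device`; return the result's shape."""
    import torch
    a = torch.rand(n, n, device=device)
    b = torch.rand(n, n, device=device)
    product = a @ b  # runs on the GPU when device == "cuda"
    return tuple(product.shape)


if __name__ == "__main__":
    device = pick_device()
    print(f"Running on: {device}")
    if importlib.util.find_spec("torch") is not None:
        print(matmul_shape(device))
```

The same code runs on either kind of hardware, and only the `device` string changes. That is the main convenience these frameworks offer: the library handles the accelerator-specific details.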

Gain exposure to accelerated computing through investment

Investing in accelerated computing means betting on the future of high-speed data processing, AI, and real-time analytics. Publicly traded companies like Nvidia (NVDA), Advanced Micro Devices (AMD), and Broadcom (AVGO) are at the core of this transformation, building the chips and hardware that power everything from self-driving cars to AI research. While individual stocks may offer strong growth potential, exchange-traded funds (ETFs) such as Global X Robotics & AI (BOTZ) or iShares Semiconductor ETF (SOXX) provide diversified exposure, which typically reduces single-stock risk. For investors looking to ride the accelerated computing wave without picking winners, these funds can be a smart entry point.

How Nvidia is taking the lead on accelerated computing

Nvidia has been one of the fastest-growing companies in recent memory, and its push into accelerated computing has put the world on notice. Originally a graphics card company, Nvidia now makes GPUs that play a central role in AI model training and data center infrastructure. For example, GPT-4, the OpenAI model behind ChatGPT, was trained using Nvidia graphics processors, which dramatically sped up the process of computing and updating the model’s parameters.

Nvidia’s hardware has reshaped how quickly computers can process data in real time. Its A100 and newer H100 chips are used in everything from training LLMs to developing self-driving car systems, because they handle massive amounts of data much faster than regular CPUs. With tools like CUDA, even smaller teams can tap into that level of compute power without needing deep hardware expertise.