The massive spending on artificial intelligence (AI) infrastructure has created several winners over the past three years. Companies from Nvidia and Broadcom to CoreWeave and Nebius have seen remarkable surges in their growth rates and stock prices.

That's why anyone buying an AI infrastructure stock right now will most likely pay an expensive multiple. All the names mentioned above, for instance, trade at high sales or earnings multiples, or both. However, one stock can still be bought at an extremely cheap valuation despite its stunning growth rate.

Micron Technology (NASDAQ: MU) makes memory and storage chips used in a range of applications, including data centers, personal computers, smartphones, and automotive systems. AI has sent its business soaring, yet Micron's valuation suggests the stock hasn't fully priced in its growth potential.

Let's take a closer look at why this semiconductor stock seems like the last great value play for investors looking to capitalize on the massive investments in AI infrastructure.

Image source: Micron Technology.

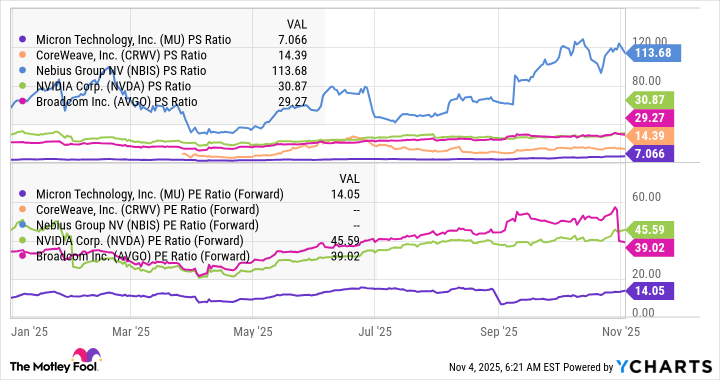

Micron is way cheaper than other AI infrastructure stocks

Before diving into Micron's valuation, it's worth looking at the company's incredible growth. In September, Micron released its fiscal 2025 results (for the year ended Aug. 28, 2025), reporting a 49% year-over-year jump in revenue to $37.4 billion and a whopping 537% increase in adjusted earnings to $8.29 per share.

Despite that growth, Micron trades at just 24 times trailing earnings. Its forward earnings multiple of 14 is even more attractive, and its price-to-sales ratio of 7 is well below those of other AI infrastructure stocks.

Data by YCharts.

Micron's lower forward earnings multiple makes it clear that analysts expect another year of strong earnings growth. Indeed, consensus estimates call for Micron's earnings to double this fiscal year. Better yet, Micron looks likely to sustain healthy growth over the long run, for one simple reason.
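Because both multiples are measured against the same share price, the gap between them translates directly into the earnings growth the market is pricing in. Here is a minimal sketch using the article's rounded multiples of 24 (trailing) and 14 (forward); the function name `implied_eps_growth` is hypothetical, chosen for illustration.

```python
def implied_eps_growth(trailing_pe: float, forward_pe: float) -> float:
    """Return the earnings growth implied by two P/E ratios at the same share price.

    Trailing EPS = price / trailing_pe and forward EPS = price / forward_pe,
    so forward EPS / trailing EPS = trailing_pe / forward_pe.
    """
    return trailing_pe / forward_pe - 1


growth = implied_eps_growth(24, 14)
print(f"Implied EPS growth: {growth:.0%}")  # roughly 71%
```

The result, roughly 71%, is lower than the doubling the consensus expects. The two figures need not match exactly: both multiples are rounded, and forward multiples may be calculated over a different estimate window than Micron's fiscal year.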

The lucrative opportunity that could send this AI stock higher

Investors have lately begun to appreciate Micron's terrific growth potential, as the stock's 179% gain this year shows. Yet the stock still trades at an inexpensive valuation despite this surge, which suggests it remains underrated given the massive end-market opportunity the company can tap.

AI chip giant Nvidia projects that data center capital spending could grow at a compound annual growth rate (CAGR) of 40% between 2025 and 2030, with annual data center capital expenditures (capex) landing between $3 trillion and $4 trillion by the end of the decade. Nvidia says this massive outlay will be driven by the shift in data centers from traditional computing on central processing units (CPUs) to accelerated computing powered by graphics processing units (GPUs).
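To see what a 40% CAGR means in practice, compound it over the five years from 2025 to 2030. The sketch below also works backward from the $3 trillion to $4 trillion end point to the 2025 starting level it implies. That starting level is derived arithmetic, not a figure Nvidia is quoted as giving here, and it assumes the 40% rate and the end-of-decade range describe the same spending series. The function names are hypothetical.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor from compounding `cagr` for `years` years."""
    return (1 + cagr) ** years


def implied_start(end_value: float, cagr: float, years: int) -> float:
    """Starting value that reaches `end_value` after `years` years at `cagr`."""
    return end_value / growth_multiple(cagr, years)


multiple = growth_multiple(0.40, 5)  # 2025 -> 2030 is five compounding periods
print(f"40% CAGR over 5 years multiplies spending by about {multiple:.2f}x")

# Assumption: the 40% CAGR applies to the same series as the end-of-decade range (in $ billions).
for end in (3_000, 4_000):
    print(f"${end:,}B in 2030 implies about ${implied_start(end, 0.40, 5):,.0f}B in 2025")
```

On these assumptions, spending would grow more than fivefold, from roughly $560 billion to $740 billion in 2025.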

Accelerated computing will play a critical role in handling AI workloads in the cloud, because it can process bigger workloads in less time, which reduces energy consumption. To enable accelerated computing in data centers, these processors need to be equipped with high-bandwidth memory (HBM).

Micron estimates that HBM market revenue could double in 2025 to $35 billion, and Micron peer SK Hynix forecasts 30% annual growth in HBM revenue through 2030. Still, don't be surprised if the HBM market grows even faster, given the projected increase in data center capex and the growing use of HBM in chips beyond GPUs.
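For a rough sense of scale, apply SK Hynix's 30% annual growth rate to Micron's $35 billion estimate for 2025. Combining the two companies' forecasts this way is an illustrative assumption, not a projection either company has published, and the function name `project` is hypothetical.

```python
def project(base: float, rate: float, years: int) -> list[float]:
    """Year-by-year values starting at `base` and growing at `rate` per year."""
    values = [base]
    for _ in range(years):
        values.append(values[-1] * (1 + rate))
    return values


# Assumption: $35B HBM market in 2025 (Micron's estimate) growing 30% a year (SK Hynix's rate).
for year, value in zip(range(2025, 2031), project(35.0, 0.30, 5)):
    print(f"{year}: ${value:.1f}B")
```

On those assumptions, the market would reach roughly $130 billion by 2030, about 3.7 times its 2025 size (1.3 to the fifth power is about 3.71).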

Nvidia and AMD already pack huge amounts of HBM into their data center GPUs, and custom AI chip designers such as Broadcom and Marvell Technology have joined the fray. That isn't surprising: HBM's higher bandwidth lets it move huge amounts of data with lower power consumption and latency, making it ideal for accelerating data center workloads.

Micron is already capitalizing on this huge opportunity. Six customers use its HBM, suggesting that most, if not all, of the major AI chip designers are deploying its memory in data centers. Micron also appears to be gaining ground in the HBM space. According to one estimate, it controlled 21% of the HBM market in the second quarter, moving into second place ahead of Samsung.

Micron is expected to lift its HBM share to between 23% and 24% by the end of the year, and its next-generation HBM offering could help it gain even more. On its September earnings call, Micron management remarked, "We believe Micron's HBM4 outperforms all competing HBM4 products, delivering industry-leading performance as well as best-in-class power efficiency."
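Share gains and market growth compound each other, because a supplier's revenue in a segment is roughly its market share times the market's size. The sketch below uses hypothetical inputs: a move from the estimated 21% share to 23.5% (the midpoint of the 23% to 24% range) while the market grows 30% in a year. It ignores pricing and product mix, and the function name `revenue_growth` is hypothetical.

```python
def revenue_growth(old_share: float, new_share: float, market_growth: float) -> float:
    """Revenue growth when revenue = share * market size.

    new_revenue / old_revenue = (new_share / old_share) * (1 + market_growth)
    """
    return (new_share / old_share) * (1 + market_growth) - 1


# Hypothetical inputs: 21% -> 23.5% share while the market grows 30%.
share_only = revenue_growth(0.21, 0.235, 0.0)
combined = revenue_growth(0.21, 0.235, 0.30)
print(f"Share gain alone: {share_only:.1%}")
print(f"Share gain plus 30% market growth: {combined:.1%}")
```

Under these assumptions, revenue would grow about 45%, compared with 30% from market growth alone.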

The chipmaker is therefore likely to benefit from both a higher market share and the secular growth of the HBM market over the next five years. All this could make Micron a top AI infrastructure stock to buy and hold for the long run, and its valuation makes buying it right now look like a no-brainer.