OpenAI's public launch of ChatGPT on Nov. 30, 2022, ignited a seismic structural shift in the technology landscape. In particular, demand for enterprise productivity software and collaborative chat tools took a back seat to an intense focus on hardware -- notably, graphics processing units (GPUs) and networking equipment for data centers.

Surging infrastructure demand propelled chip stocks such as Nvidia, Advanced Micro Devices, and Broadcom to record highs over the last few years. Since ChatGPT's release, each of these semiconductor stocks has handily outperformed the S&P 500 and Nasdaq Composite.

One stock that has performed respectably but has underperformed its peers in the chip space is Micron Technology (NASDAQ: MU). In my view, Micron's role in the artificial intelligence (AI) realm is misunderstood and largely overlooked. Hence, the stock trades at a notable discount relative to its semiconductor cohorts.

To understand Micron's strategic positioning, investors must look beyond the GPU narrative and begin focusing on the quiet, albeit enormously important, pocket of memory and storage in AI.

Micron plays an important role in the AI infrastructure stack

Over the last few years, chances are you've been inundated with references to a host of large language models (LLMs) and AI assistants such as OpenAI's ChatGPT, Anthropic's Claude, Perplexity, DeepSeek, Alphabet's Google Gemini, Mistral AI, and Meta Platforms' Llama. These models have spurred generational demand for GPUs and custom application-specific integrated circuits (ASICs) -- an obvious tailwind for the likes of Nvidia, AMD, and Broadcom.

What often goes unnoticed is that as each generation of AI model becomes more sophisticated, hyperscalers such as Meta Platforms, Microsoft, Amazon, and Alphabet won't just be spending more on GPUs to process their workloads. Another crucial allocation for AI capital expenditure (capex) budgets is memory and storage. As model training and inference are pushed to the limit, additional high-performance computing (HPC) memory and storage solutions are required to satisfy rising capacity and bandwidth thresholds.

This fits squarely into Micron's wheelhouse. The company's high-bandwidth memory (HBM), conventional dynamic random access memory (DRAM), and NAND flash products offer developers a vertically integrated AI memory stack. HBM, itself a stacked form of DRAM, provides the bandwidth layer GPUs need for training, while conventional DRAM and NAND support processing and storage requirements for growing data workloads.

Here is what the market is missing about Micron

In general, the semiconductor industry tends to exhibit cyclicality. Investors dislike uncertainty, so when demand trends are hard to forecast or financial guidance appears lumpy, they tend to view a company as high risk and apply lower valuation multiples to the business. At its core, I think this sentiment is holding Micron stock back at the moment.

In the past, Micron relied heavily on PC and smartphone upgrade cycles to drive demand. The association with unpredictable, consumer-centric retail cycles has fueled a belief that Micron lacks the same secular tailwinds enjoyed by its GPU-focused peers. I think this logic no longer holds.

A new chapter in the AI narrative is swiftly being written -- one featuring sustained, rising infrastructure and capex spend from the largest generative AI developers. As AI training and inference continue to scale, big tech will map out detailed, multi-year buildout plans for data centers and GPU clusters. The resulting demand will not be a transitory spike but rather a set of structural commitments that unfold over the next several years.

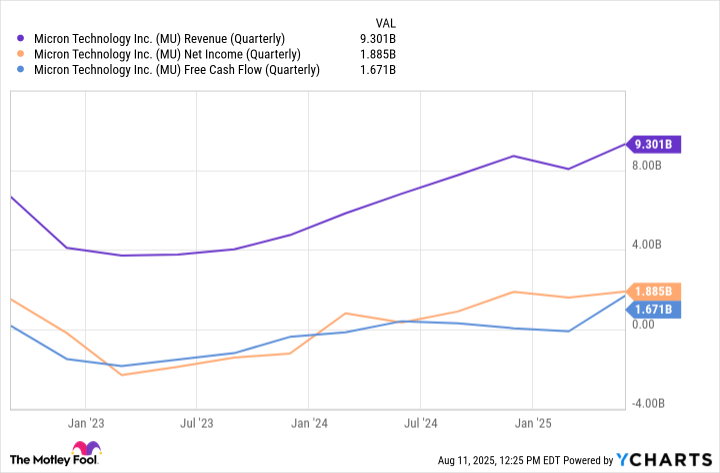

MU Revenue (Quarterly) data by YCharts.

This bodes well for Micron, as more robust memory platforms are becoming mission-critical necessities as opposed to a "nice to have" enhancement. In the long run, this should smooth out some of the volatility in Micron's financial profile and help transform its memory and storage business into a foundational layer on which AI infrastructure applications are built.

Is Micron stock a buy right now?

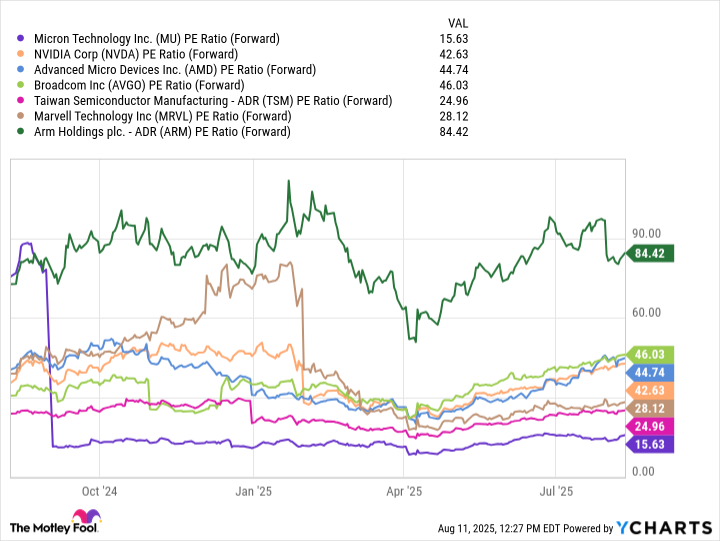

The comparable company analysis below includes a number of established businesses playing critical roles across the chip landscape. Based on the forward price-to-earnings (P/E) multiple, Micron is by far the cheapest stock in this peer group. I find this valuation disparity a tad ironic, as Micron is positioned to benefit from the same hyperscaler infrastructure spend that also drives meaningful growth among its peers.

MU PE Ratio (Forward) data by YCharts. PE Ratio = price-to-earnings ratio.
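
For readers who want to see the arithmetic behind a forward P/E comparison, here is a minimal sketch in Python. It assumes the common definition of forward P/E as the current share price divided by expected earnings per share over the next 12 months. The company names, prices, and earnings estimates are hypothetical placeholders, not actual market data for Micron or any of its peers.

```python
def forward_pe(price: float, expected_eps: float) -> float:
    """Forward P/E = current share price / expected EPS over the next 12 months."""
    if expected_eps <= 0:
        # The multiple is not meaningful when expected earnings are zero or negative.
        raise ValueError("forward P/E requires positive expected EPS")
    return price / expected_eps


# Hypothetical placeholder figures (share price, expected EPS), NOT real market data.
peers = {
    "CHIP_A": (200.0, 5.0),
    "CHIP_B": (150.0, 6.0),
    "CHIP_C": (90.0, 9.0),
}

# Compute the multiple for every company in the peer group.
multiples = {name: forward_pe(price, eps) for name, (price, eps) in peers.items()}

# The "cheapest" stock on this metric is the one with the lowest multiple.
cheapest = min(multiples, key=multiples.get)

for name, multiple in sorted(multiples.items(), key=lambda item: item[1]):
    print(f"{name}: {multiple:.1f}x forward earnings")
print(f"Lowest forward P/E in this group: {cheapest}")
```

A lower multiple means investors are paying less for each dollar of expected earnings, which is the sense in which a stock is "cheap" relative to its peer group on this metric.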

In my eyes, the valuation disparity suggests that the market underappreciates Micron's role in the chip realm and may see its memory products as a commoditized infrastructure solution. To me, the theme here is that as the hyperscalers boost their capex budgets to include more memory and storage solutions, Micron is well positioned for meaningful revenue acceleration and widening profit margins.

In the AI landscape, I see Micron as a rare example of a company that carries compelling growth prospects but trades more like a value stock. For this reason, I consider Micron a no-brainer opportunity that should not be ignored by growth investors with a long-term time horizon.