Artificial intelligence tools that can write emails, create art, and perform other complex tasks seem to have taken the world by storm. One type of tool, the large language model, learns to generate text that can be used in myriad ways.

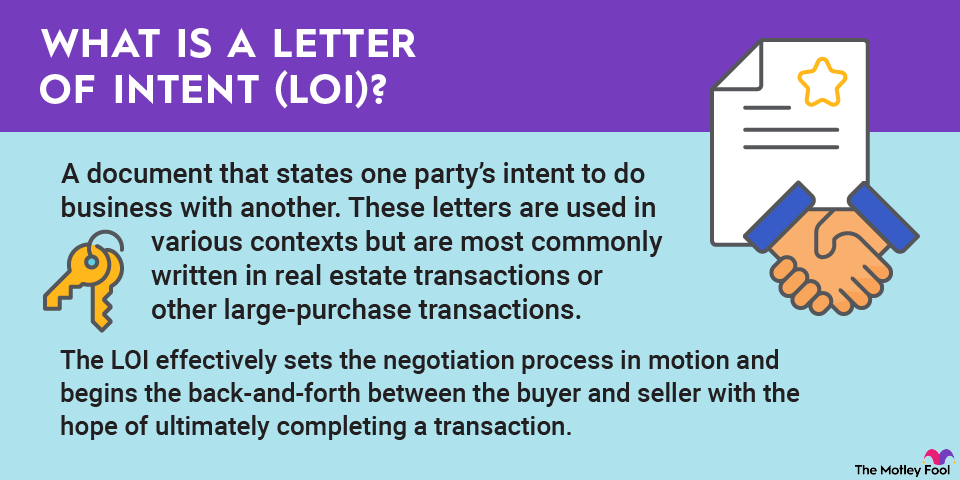

What are large language models?

Large language models (LLMs) are a type of artificial intelligence (AI) trained to generate sentences and paragraphs based on patterns learned from a training dataset. Like the autocomplete tools that suggest your next word based on what you've already written, LLMs predict which text is likely to come next. The difference is scale: LLMs are trained on far more data, which lets them produce whole sentences, paragraphs, and essays in response to a prompt.

Although LLMs were designed as language-predicting models, they are now being adapted for a wide range of applications, from customer service chatbots to companionship apps for the lonely and personal assistants that can draft simple letters and emails.

How do large language models work?

Large language models, like other forms of artificial intelligence, are clever computer programs, but they're just that: computer programs. They have to be taught how to do their jobs from the ground up. Here's how that works:

- Training. During the training phase, LLMs analyze trillions of words to learn the statistical patterns of language. As with other machine learning models, the better the datasets, the better the results. With an LLM, language data is fed into the system without labels or task-specific instructions. The system learns by repeatedly predicting what comes next in the text, and in doing so it picks up context, word meanings, and the relationships between words in a given language.

- Fine-tuning. Depending on the task the LLM is meant to perform, it will need some fine-tuning, which is additional training on examples of that task, to ensure it does the job properly. For example, if it's meant to do translation, human translators may review its output, correct it when it makes mistakes, and help shape the examples used to train it further.

- Prompt-tuning. Prompt-tuning is a lot like fine-tuning; it helps guide the LLM toward the behavior its developers want. Unlike fine-tuning, which optimizes the model for a specific task, prompt-tuning helps the system learn to recognize what kind of response is needed from "prompts," such as "Answer the question" or "Can you help me." Much like how humans infer from tone of voice that a question is coming, prompt-tuning can help a general-purpose LLM understand what is expected of it.
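
The training step described above can be illustrated with a toy example. The sketch below is not how a real LLM is built (real models use neural networks and far more data); it is a minimal word-pair counter, with hypothetical function names and a made-up corpus, that shows the basic idea of learning from raw text without labels and then predicting the next word.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(model, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start_word, max_words=8):
    """Build a sentence by repeatedly appending the predicted next word."""
    words = [start_word.lower()]
    for _ in range(max_words - 1):
        next_word = predict_next(model, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


# Made-up training text; no labels or instructions are attached to it.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next(model, "sat"))   # on
print(generate(model, "on", 3))     # on the cat
```

Nothing in the corpus says that "on" should follow "sat"; the model picks that up purely from how often the pair appears, which is the same principle, at a vastly smaller scale, behind LLM training.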

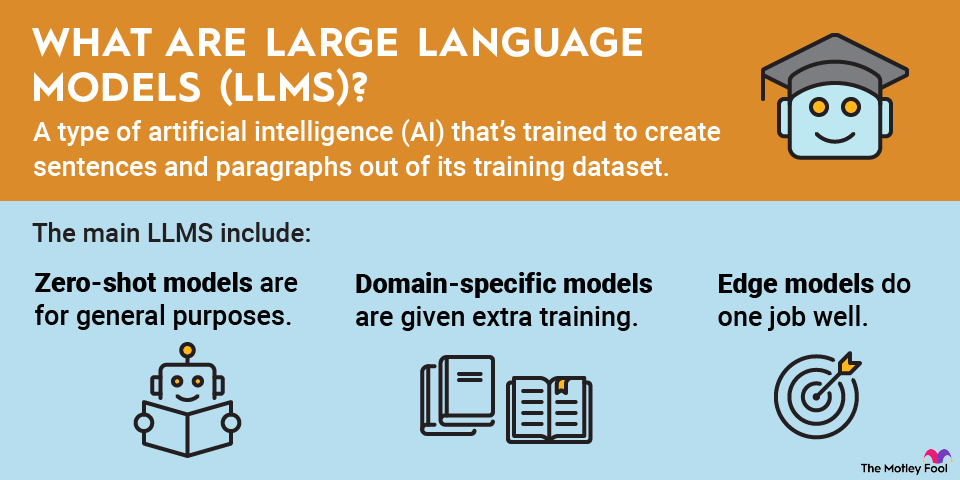

Types of large language models

Although it might seem like all large language models are essentially the same, there are huge differences in how they are trained and used. The main types include:

- Zero-shot models. These are general-purpose LLMs trained on enormous, wide-ranging datasets. They're not meant to do any one thing. Instead, they're expected to handle many different tasks based on what the user requests, without being given extra training for each one.

- Domain-specific models. If you take a zero-shot model and give it extra training, you can make a domain-specific model. This model knows a lot about a much narrower subject. For example, you might build an LLM that can listen to users' problems and give supportive feedback, similar to a counselor.

- Edge models. Edge model LLMs do one job and do it very well. These tools are already in use all over the place, doing small tasks that require immediate feedback, like translating signs or helping users by suggesting words that should fit together with their sentences when composing a letter.
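
The step from a general-purpose model to a domain-specific one can be sketched in the same toy fashion. The example below assumes a simple word-pair counter stands in for the model, and the function names and both text samples are invented for illustration. It shows only the general idea that extra training on specialized text shifts what the model predicts.

```python
import copy
from collections import Counter, defaultdict


def train(model, text):
    """Add next-word counts from `text` to `model` and return the model."""
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        model[current_word][next_word] += 1
    return model


def predict_next(model, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None


# Invented samples: broad text first, then finance-specific text.
general_text = "the bank of the river . the bank of the river was muddy ."
finance_text = "the bank raised rates . the bank raised fees . the bank raised capital ."

general_model = train(defaultdict(Counter), general_text)

# Extra training starts from a copy of the general model, not from scratch.
domain_model = train(copy.deepcopy(general_model), finance_text)

print(predict_next(general_model, "bank"))  # of
print(predict_next(domain_model, "bank"))   # raised
```

The domain model keeps everything the general model learned and adds to it, which is why its prediction after "bank" changes while the general model's stays the same.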

Concerns about large language models

Large language models are very interesting computer programs, but they've also stirred up a good deal of controversy since they were widely introduced. Two common concerns involve copyrighted information and LLM hallucinations. Both may have far-reaching implications for the commercial viability of LLMs in general.

Copyright infringement

Because LLMs must be trained on enormous datasets, many LLM developers have pulled information from sources that may or may not actually be open for use in this way. For example, some are alleged to be training LLMs on social media data, while others may be using whole books or websites written by someone who has not given their permission for use in training these models.

This creates a problem when it comes to ownership. Since LLMs are software that can't legally own anything, their output isn't treated the same way as the work of a person who is simply influenced by a particular writer, for example. When the data used to train the LLM was used without permission, the material generated by the LLM may infringe on the copyright of the original creators.

So, right now, the question is who owns the LLM's output. Is it the software designer who trained the software on essentially pirated content? Is it the owners of the content that was pirated? Is it a different third party?

Hallucinations

Another huge concern for users and owners of LLMs is hallucinations. A hallucination occurs when an LLM gives a user a wrong answer but presents it with complete confidence. Instead of simply saying it doesn't know, an LLM will give the answer its training makes statistically most likely, even if that answer makes absolutely no sense.

This doesn't happen all the time, of course, but it happens often enough that there is a great deal of concern about trust in LLMs and their outputs. Many people have also noticed that LLMs can be unreliable with numbers, sometimes being unable to even count the words in their own output.

Of course, accuracy, hallucinations, and mathematical ability will vary between LLMs. Some models do much better than others, but they're all prone to problems of this nature since they weren't trained to fully understand what they're outputting, only what is statistically likely to make sense as a sentence in the context given.
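
To see why a statistical model always produces an answer, consider the sketch below. It assumes the model assigns a raw score to each candidate answer and converts those scores into probabilities with the standard softmax function. The scores and the helper names are invented for illustration and do not come from any real model.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores.values())
    exps = {token: math.exp(score - highest) for token, score in scores.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def pick_answer(scores):
    """Return the most probable candidate and its probability."""
    probs = softmax(scores)
    best = max(probs, key=probs.get)
    return best, probs[best]


# Hypothetical scores for completing "The capital of Australia is ...".
well_supported = {"Canberra": 6.0, "Sydney": 2.0, "Melbourne": 1.0}
poorly_supported = {"Canberra": 1.1, "Sydney": 1.3, "Melbourne": 1.0}

for name, scores in [("well supported", well_supported),
                     ("poorly supported", poorly_supported)]:
    answer, probability = pick_answer(scores)
    print(f"{name}: {answer} ({probability:.0%})")
```

In the second case the scores are nearly tied and the top candidate is wrong, yet the selection step still returns an answer in the same form as the first. Nothing in this procedure produces "I don't know" unless that response is itself one of the scored candidates.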