It's time to peer into the heart of computer hardware to understand an essential component: the GPU. Don't worry; this won't get too technical, but understanding this term could be instrumental for your tech investments.

What is a GPU?

GPU stands for "graphics processing unit." This computer chip is the brain behind every visual element that your computer displays. From the colorful graphs in your stock analysis software to the latest blockbuster movie streaming on your 4K screen, the GPU is hard at work turning digital data into visible pixels.

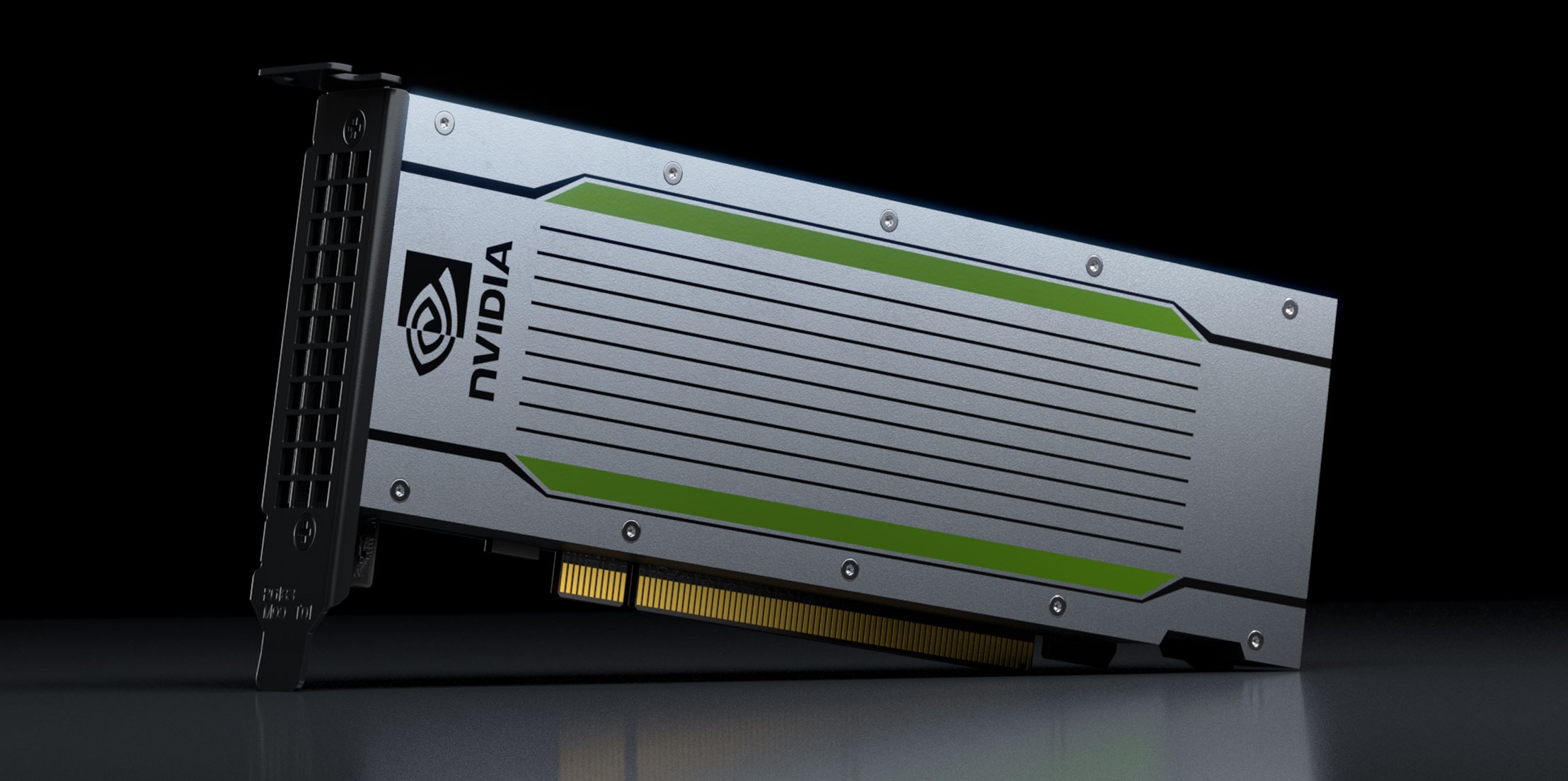

You'll occasionally see the terms GPU and graphics card used interchangeably. That's a useful shorthand, but not exactly right. The two pieces of hardware are closely related, and you can't make a graphics card without a GPU, so it sort of makes sense when the two terms are conflated into a single concept. Still, technically speaking, they are not the same thing.

The GPU is the most important chip on a fully realized graphics card. Besides the GPU, the card also contains memory chips, a cooler, and other components. Graphics cards typically also come with a few HDMI or DisplayPort connectors, through which the right cable carries the generated graphics data to a monitor (or three). Think of the graphics card as a fully functional car, with the GPU as the engine powering it.

Why should you care about the GPU?

Now, you might wonder why an investor needs to know about GPUs. Well, consider this: The same number-crunching horsepower that makes these chips so good at generating high-quality graphics in real time also makes them a leading choice for many other high-performance computing tasks. The leading GPU designers, Advanced Micro Devices (AMD) and Nvidia (NVDA), have moved beyond the basic market for graphics cards to explore a wider range of heavy-duty computing opportunities.

The hardware used in these applications is usually not a consumer-grade graphics card. Instead, it is a specialized computing product with lots of memory and one or more GPU chips, but no ports for display output. Between consumer cards and these specialized products, GPUs are crucially important in several high-growth technology sectors, from video games and virtual reality to artificial intelligence (AI) and cryptocurrency mining.

GPUs have become especially essential in the AI market. CPUs (central processing units) are built to handle a few complex tasks at once. GPUs, by contrast, excel at running the same simple calculation on thousands of pieces of data simultaneously, making them ideal for training AI models that need to process vast amounts of data quickly.
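If you're curious what "doing many things simultaneously" looks like, here is a minimal sketch in Python. It brightens a handful of pixels twice: once in a single pass, and once by splitting the pixels into chunks that separate workers can handle independently. The function names, the brightness amount, and the pixel values are all made up for illustration, and ordinary Python threads will not actually speed this up. The point is only that no pixel depends on any other, which is exactly the kind of work a GPU can spread across thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel, amount=40):
    """Raise one pixel's brightness, capped at the 8-bit maximum of 255."""
    return min(pixel + amount, 255)


def brighten_sequential(pixels):
    """One worker visits every pixel in turn."""
    return [brighten(p) for p in pixels]


def brighten_in_chunks(pixels, workers=4):
    """Split the pixels into chunks and give each chunk to its own worker.

    This only imitates the idea behind a GPU: every pixel can be
    processed without waiting for any other pixel.
    """
    size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns the chunk results in their original order.
        results = pool.map(brighten_sequential, chunks)
    return [p for chunk in results for p in chunk]


# Hypothetical grayscale pixel values (0 = black, 255 = white).
pixels = [0, 100, 200, 250, 30, 220, 255, 15]

one_worker = brighten_sequential(pixels)
many_workers = brighten_in_chunks(pixels)
print(one_worker == many_workers)  # both approaches give the same image
```

Both approaches produce the same result. The difference on real hardware is how many pixels can be worked on at the same moment.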

Time to take action

Knowing about GPUs and their role in driving key technology sectors can be a real game-changer for investors. Pay attention to tech companies that design and sell GPUs (like Nvidia and AMD), but also to firms that rely on GPUs in their technology. For example, the GPU is of central importance to AI startups, gaming companies, and some cryptocurrency platforms.

Crypto mining on GPUs isn't as popular as it once was. Bitcoin (BTC) miners are better off using special-purpose mining chips instead, and most GPU-based mining moved to Ethereum (ETH) many years ago.

But Ethereum's platform upgrade, completed in September 2022, took mining out of the equation entirely. Since that game-changing shift, GPU-powered crypto mining has been limited to altcoins such as Ethereum Classic (ETC), Ravencoin (RVN), Monero (XMR), and Zcash (ZEC).

These alternatives are not as robust as Bitcoin and Ethereum, and it can be difficult to trade the not-so-popular coins you're mining. So mining crypto with a GPU isn't the name of the game anymore, but crypto usage could make a comeback, and you should be aware of this sub-market's interesting history.

Understanding the GPU also empowers you when assessing the effects of market events. For instance, a global chip shortage could affect GPU manufacturers, potentially driving up prices, which would ripple through sectors that rely on GPUs.

The GPU in action: Training the ChatGPT system

To truly illustrate the importance of GPUs, let's turn to the realm of artificial intelligence. In particular, we'll look at OpenAI's large language models (LLMs), GPT-3 and GPT-4. Both are large-scale machine learning models trained on a wealth of internet text, and models from this family power the popular ChatGPT application.

But what does this have to do with GPUs?

It's simple. The training of these colossal models involves processing an astronomical amount of data, a task for which GPUs are uniquely qualified. The right chips can perform thousands of calculations simultaneously, making them crucial in creating these language models in a reasonable timeframe.
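To get a feel for the scale involved, here is a rough sketch in Python, under some loudly stated assumptions. Much of the arithmetic in AI training boils down to multiplying large grids of numbers (matrices), and every cell of the result can be calculated independently of the others. The helper names and the layer sizes below are hypothetical and are not taken from GPT-3, GPT-4, or any other real model.

```python
def matmul_with_count(a, b):
    """Multiply two matrices the slow way and count every multiply-add."""
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0] * cols for _ in range(rows)]
    count = 0
    for i in range(rows):
        for j in range(cols):
            # Each out[i][j] depends only on row i of a and column j of b,
            # so all rows * cols cells could be computed at the same time.
            for k in range(inner):
                out[i][j] += a[i][k] * b[k][j]
                count += 1
    return out, count


def multiply_adds(rows, inner, cols):
    """Multiply-adds needed for a (rows x inner) times (inner x cols) product."""
    return rows * inner * cols


# A tiny example that is easy to check by hand.
a = [[1, 2, 3], [4, 5, 6]]    # 2 x 3
b = [[1, 0], [0, 1], [2, 2]]  # 3 x 2
product, counted = matmul_with_count(a, b)
print(product, counted)

# HYPOTHETICAL layer: 512 inputs at a time, 4,096 numbers in, 4,096 numbers out.
print(multiply_adds(512, 4096, 4096))
```

Under those assumed sizes, a single step through a single layer already takes billions of multiply-adds, and training repeats steps like this an enormous number of times. A chip that can work on thousands of those independent cells at once makes the difference between a practical timeframe and an impractical one.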

One of the key players in the GPU market, Nvidia, has been particularly instrumental in this development. The Nvidia V100, A100, and H100 Tensor Core GPUs, for instance, are built to accelerate AI training and inference at every scale. Thanks to the AI training performed on high-powered GPUs like these, the GPT-4 AI system can analyze and generate text at a level that often approaches human performance.

AMD has its Instinct accelerators (formerly sold under the Radeon Instinct name), designed specifically for machine learning and AI workloads. Its GPUs are also widely used across the tech industry, powering advancements in AI and machine learning. With just a handful of exceptions, the world's most powerful supercomputers are built around AI-oriented GPUs from either Nvidia or AMD.

The deployment of these GPUs isn't limited to a shadowy server farm either. They are hard at work in everyday applications like ChatGPT, where users interact with the AI models in real time. This practical application of GPU technology shows how integral they are to advancements in AI, affecting both businesses and consumers.

So understanding the role of GPUs isn't just a tech concern. It directly relates to evaluating companies that design GPUs, like Nvidia and AMD, and businesses that use them heavily, such as OpenAI. Knowing the basic facts about GPUs will help you make more informed predictions about these companies' performance and their impact on the tech industry.