If technology had a pulse, it would probably follow the rhythm of Moore's law. It's the unsung hero behind why our gadgets keep getting faster and better without breaking the bank. But how well do you know this famous observation? Read on for the lowdown on what Moore's law is and why it matters to investors in the semiconductor industry.

What is Moore's law?

As well-known as it is, Moore's law is also widely misunderstood. It is not a literal law, passed by Congress and signed by the president. It isn't a law of physics either, or even a theory of physics that can be tested and refined.

Instead, Moore's law started as an offhand observation by semiconductor legend Gordon Moore in 1965. Reviewing the rate of progress in the early days of microchips and semiconductors, he charted how quickly chips were becoming more complex. The number of components per chip was doubling roughly every year, and Moore postulated that this rate of growth could continue for the next decade.

1975 came and went, and the pace of increased complexity held up well. That year, Moore revised his forecast to a doubling of components per chip roughly every two years. Each component also offered higher performance over time, so the total performance of semiconductor chips was doubling in roughly 18 months. Many people see this as the best definition of Moore's law. Others prefer to track energy efficiency (performance per watt) instead. So, the law has changed over the years and was never intended to force a particular rate of technological progress in the first place.
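To see how much the choice of doubling period matters, here is a minimal sketch of the compounding arithmetic. It assumes idealized, perfectly steady doubling, which real chips never follow exactly, and the function name `growth_factor` is my own illustration rather than anything from Moore's work. The three periods are the ones commonly quoted for Moore's law: one year, 18 months, and two years.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times larger a quantity gets over `years`
    if it doubles once every `doubling_period_years` (idealized model)."""
    return 2 ** (years / doubling_period_years)


# Compare the commonly quoted doubling periods over one decade.
for label, period in [
    ("every 12 months", 1.0),
    ("every 18 months", 1.5),
    ("every 24 months", 2.0),
]:
    factor = growth_factor(10, period)
    print(f"Doubling {label}: about {factor:,.0f}x over 10 years")

# Prints:
# Doubling every 12 months: about 1,024x over 10 years
# Doubling every 18 months: about 102x over 10 years
# Doubling every 24 months: about 32x over 10 years
```

A seemingly small change in the doubling period compounds into a huge gap after a decade, which is why it matters which version of Moore's law someone has in mind.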

Decades later, this idea is running into the immutable laws of physics. The smallest chip features are approaching the scale of individual atoms, and at some point chipmakers would have to split atoms in order to make their semiconductor traces any smaller.

Is Moore's law still relevant?

Moore's law still holds weight since chip designers can use some tricks to keep the complexity growth going. For example, if you can't make the components any smaller, you can make the chips larger instead. Adding more chip-trace layers on top of each other can also boost the effective complexity.

I'm sure Silicon Valley has other ideas up its proverbial sleeve. Chips keep getting faster, smaller, cheaper to make, and more power-efficient. The rate of improvements may change over time, but the technology always moves forward.

Aren't computer chips fast enough already?

The need for speed isn't going away. You know what I mean if you ever find yourself twiddling your thumbs while your computer, phone, or media center is taking its sweet time doing something important.

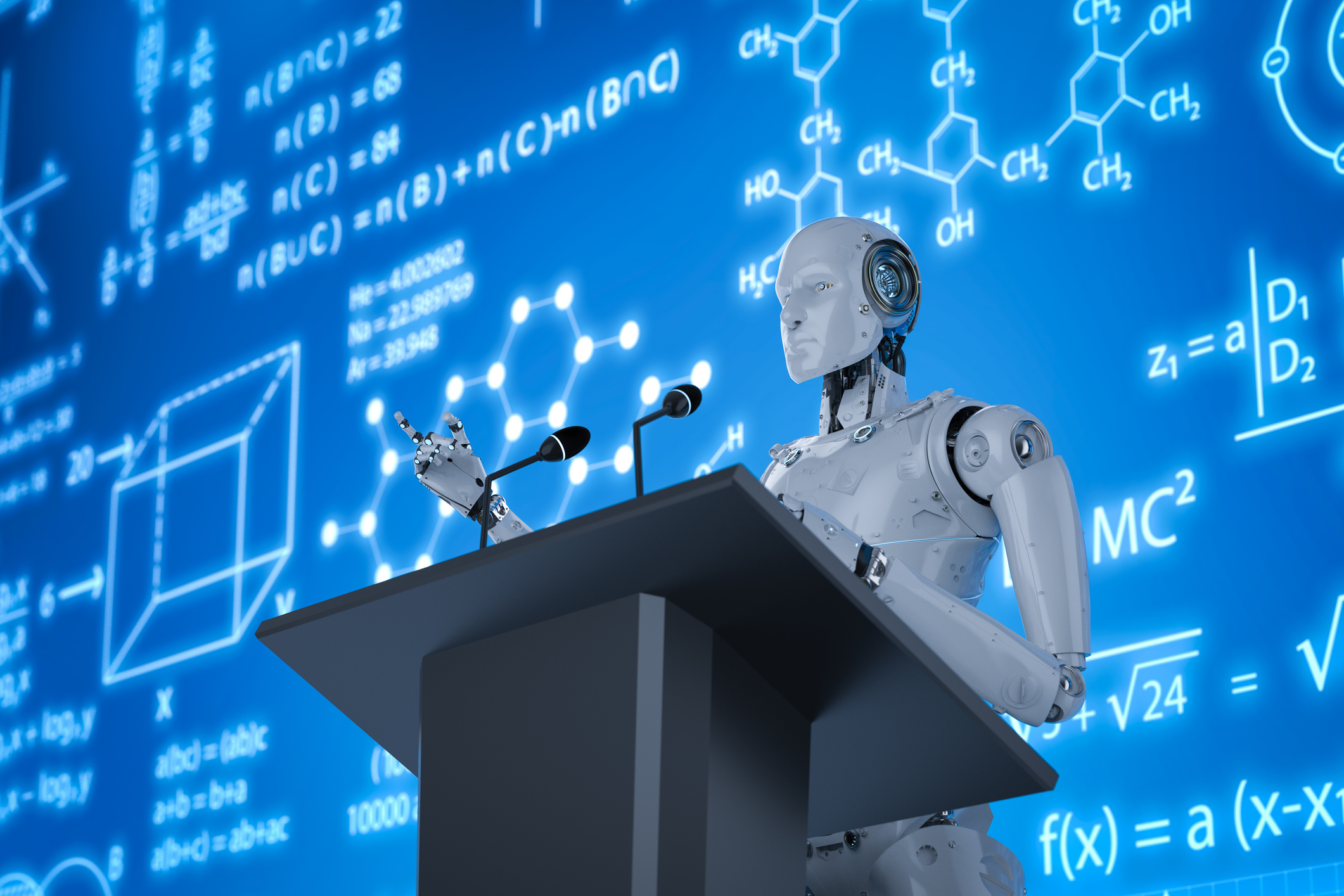

Sure, today's chips can juggle dozens of applications simultaneously, stream 4K videos without a hiccup, and support real-time multiplayer gaming. However, tomorrow's challenges are just around the corner. We're looking at a future where virtual reality could be as common as our morning coffee, where artificial intelligence analyzes massive data sets to predict global trends, and where real-time language translation happens as effortlessly as a casual conversation. These are solid use cases for computing hardware with massive horsepower.

So, there will always be a need for more silicon-powered performance. What's "good enough" today will seem quaintly slow in a few years and barely usable in a decade or two.

Keeping up with the performance demand is even more important when a quick calculation really matters. Self-driving cars must react to ever-changing traffic conditions in the blink of an eye. Fast computing can have life-or-death implications in medical monitoring systems, emergency response solutions, air traffic control platforms, and more. Computers are not always fun and games.

And so, hardware designers strive to keep Moore's law alive in some form. If that means moving the goalposts sometimes -- measuring performance per watt, or adding more processing cores instead of boosting the performance per core -- then so be it. The show must go on.