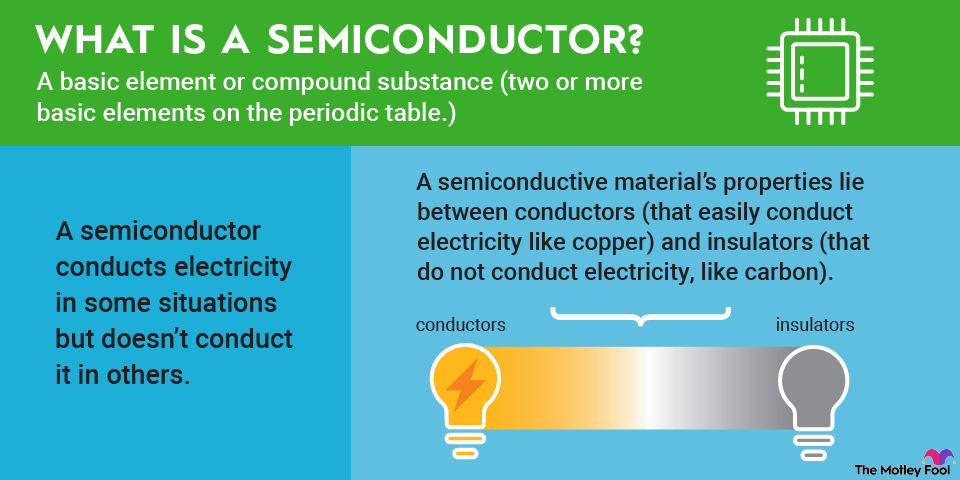

However, electricity can be used as far more than a light source. Scientists in the 1800s also experimented with how electricity behaves, discovered how different materials and conditions (like changes in temperature) could affect the flow of electricity, and learned how that energy could be put to other uses. By the late 1800s and early 1900s, the first "semiconductor devices" were being developed in experiments to transmit sound, emit light, and detect radio waves.

Later, semiconductor devices called transistors were shown to be effective at amplifying or switching electric signals and, thus, at managing electric current. This work on transistors laid the groundwork for all modern electronics -- including the invention of the integrated circuit (aka microchip or chip) in 1958 by Texas Instruments electrical engineer Jack Kilby.

Semiconductors rule the world

Fast forward to today, and semiconductors (chips) are at the heart of nearly everything we do. Sure, the world still relies heavily on fossil fuels to generate energy, but nearly everything that happens after that energy is generated is governed by semiconductors. And even in energy generation itself, semiconductors are beginning to take over.

Solar panels are mostly made of silicon. Chips are used in the equipment that drills for fossil fuels and generates electricity from them. Energy storage and transfer (the power grid) require semiconductors. Chips regulate the electricity flowing into and within our homes, workplaces, and vehicles. The internet stores and moves data via chips. And, of course, the computing devices we rely on throughout the day -- PCs, tablets, smartphones, wearables -- are built using semiconductors. Most of the products we use, if not all of them, were made with equipment featuring lots of chips.